Introduction

DevOps and QA are in the midst of a revolution, reshaping how we build, test, and deliver software at breakneck speed. Traditional test automation, while effective, struggles to keep pace with the velocity of modern CI/CD pipelines.

Enter Generative AI. It marks a paradigm shift, empowering QA teams to create self-optimising test suites that evolve alongside their applications.

This blog explores how Generative AI is not just augmenting, but fundamentally redefining DevOps workflows by eliminating manual bottlenecks, predicting failures, and enabling truly adaptive testing.

1. The Automation Bottleneck in Traditional Testing

Most test automation frameworks rely on static scripts, which bring the following problems:

Manual Script Updates: 30-40% of QA effort is spent fixing broken locators or outdated workflows.

Brittle Test Design: Scripts fail to adapt to UI/API changes, so tests fail even when the application works. These false failures erode trust in the suite.

Scalability Limits: Adding new tests slows pipelines, creating a tradeoff between coverage and speed.

Flaky Results: Static scripts produce inconsistent, "flaky" results in dynamic environments.

Limited Flexibility: New technologies are hard to adopt into existing frameworks.

Static tests create automation debt: a hidden cost where maintaining outdated scripts consumes more time than writing new ones.

2. Generative AI is a Game-Changer for QA

Generative AI (e.g., LLMs like GPT-4, Claude, DeepSeek) doesn’t just execute tests. It creates and refines them.

How It Works:

1. Test Generation:

AI analyses user stories, APIs, and UI elements to auto-generate test cases.

Example: An LLM such as GPT-4, given Jira tickets as context, writes Cucumber scenarios for BDD.

2. Self-Optimisation:

AI identifies redundant or low-value tests and prunes them.

AI prioritises the remaining tests based on risk (e.g., recent code changes and past failures).

3. Adaptive Execution:

Dynamically adjusts test parameters (e.g., wait times, locators) during runtime.
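The locator part of adaptive execution can be sketched without any AI at all: keep several candidate locators for an element and use the first one that still matches. This is a minimal illustration, not a specific tool's behaviour. The `find` callable, the `find_with_fallback` helper, and the dictionary standing in for a page are all hypothetical; in a real suite `find` would wrap the browser driver's lookup, and an AI model might be what proposes the candidate list.

```python
from typing import Callable, Optional, Sequence


def find_with_fallback(
    find: Callable[[str], Optional[object]],
    locators: Sequence[str],
) -> tuple[str, object]:
    """Try candidate locators in order and return the first that matches."""
    for locator in locators:
        element = find(locator)
        if element is not None:
            return locator, element
    raise LookupError(f"No candidate locator matched: {list(locators)}")


# Hypothetical stand-in for a page whose button id changed
# from "submit" to "submit-btn".
fake_dom = {"#submit-btn": "<button>", "button[type=submit]": "<button>"}

used, element = find_with_fallback(
    fake_dom.get, ["#submit", "#submit-btn", "button[type=submit]"]
)
print(used)  # the locator that actually matched
```

Logging which fallback was used gives reviewers a signal that the primary locator needs updating, rather than hiding the drift.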

We know of a fintech company that used Generative AI to auto-generate 1,200+ test scenarios for a payment gateway by parsing Swagger docs, reducing test design time by 70%.
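To make the idea of parsing Swagger docs concrete, here is a rough sketch that walks an OpenAPI-style dictionary and lists one scenario title per documented response. It is not the method that company used, which we don't have details on. The `scenarios_from_openapi` helper and the sample `spec` are made up for illustration; in practice the titles (or the raw spec) would be passed to an LLM to draft the full test bodies.

```python
def scenarios_from_openapi(spec: dict) -> list[str]:
    """List one scenario title per (method, path, documented status code)."""
    http_methods = {"get", "post", "put", "patch", "delete"}
    scenarios = []
    for path, operations in spec.get("paths", {}).items():
        for method, operation in operations.items():
            if method not in http_methods:
                continue  # skip non-operation keys such as "parameters"
            for status in operation.get("responses", {}):
                scenarios.append(f"{method.upper()} {path} returns {status}")
    return scenarios


# Hypothetical, heavily trimmed spec for a payment API.
spec = {
    "paths": {
        "/payments": {
            "post": {"responses": {"201": {}, "400": {}}},
        },
        "/payments/{id}": {
            "get": {"responses": {"200": {}, "404": {}}},
        },
    }
}

for title in scenarios_from_openapi(spec):
    print(title)
```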

3. Integrating Generative AI into Automated DevOps Pipelines

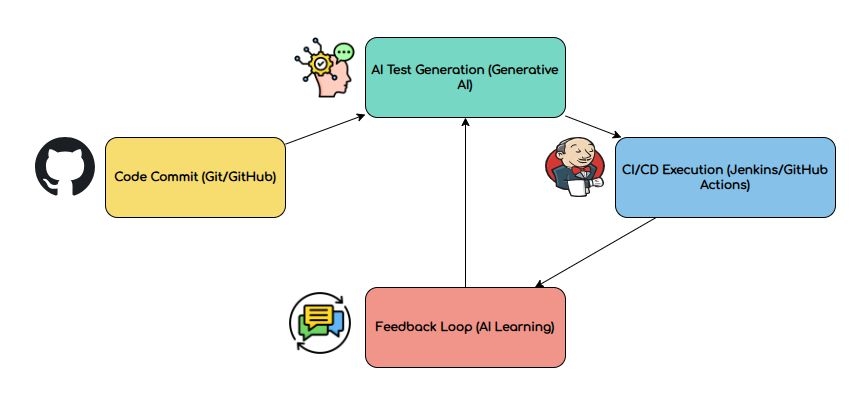

Code Commit → AI Test Generation → Risk-Optimised Execution → Automatic Feedback Loop

Diagram Flow:

Code Commit: The developer pushes changes to GitHub.

AI Test Generation:

Generative AI scans PR descriptions, code diffs, and existing tests.

Creates new tests or updates existing ones.

Review Step: AI-generated scripts are reviewed by QA engineers before execution.

CI/CD Execution:

Runs only high-priority, risk-adjusted tests in parallel.

Selenium Grid is used for more efficient parallel execution.

Feedback Loop:

AI analyses test results to refine future test suites.
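The "high-priority, risk-adjusted" selection in the flow above can be approximated with a simple weighted score. The sketch below is an assumption-laden illustration: the `SuiteEntry` type, the weights, the threshold, and the file-to-test mapping are all hypothetical, and a real pipeline might replace the hand-set weights with a learned model.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    name: str
    covered_files: set[str]
    recent_failure_rate: float  # between 0.0 and 1.0


def risk_score(entry, changed_files, change_weight=0.7, failure_weight=0.3):
    """Combine 'touches changed code' with historical failure rate."""
    touches_change = 1.0 if entry.covered_files & changed_files else 0.0
    return change_weight * touches_change + failure_weight * entry.recent_failure_rate


def select_tests(entries, changed_files, threshold=0.5):
    """Return names of tests at or above the threshold, riskiest first."""
    scored = [(risk_score(e, changed_files), e.name) for e in entries]
    return [name for score, name in sorted(scored, reverse=True) if score >= threshold]


# Hypothetical suite and commit.
entries = [
    SuiteEntry("test_checkout", {"cart.py", "payment.py"}, 0.10),
    SuiteEntry("test_login", {"auth.py"}, 0.05),
    SuiteEntry("test_refund", {"payment.py"}, 0.40),
]
changed = {"payment.py"}

print(select_tests(entries, changed))
```

Tests that fall below the threshold are not deleted, only skipped for this commit; running the full suite on a schedule keeps the failure-rate history current.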

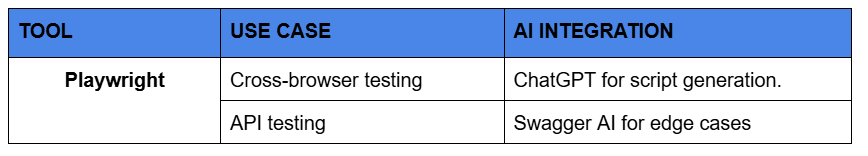

Tools to Highlight:

AI Test Orchestration:

Tricentis Tosca: Uses NLP to auto-generate test cases from user stories.

Selenium Grid + AI: Parallel test execution with auto-scaling cloud resources.

CI/CD Integration:

Jenkins AI Plugins: Trigger tests based on code complexity scores.

GitHub Actions: Auto-comment PRs with AI-generated test summaries.

4. The Future: Fully Autonomous QA Pipelines

Generative AI is paving the way for pipelines with capabilities such as:

Predictive Analytics:

AI forecasts test flakiness before execution, so the pipeline can rely on stable tests and isolate the ones likely to give inconsistent results.

Example: An ML model trained on past pipeline data predicts failures with 92% accuracy.

Self-Healing Infrastructure:

AI auto-rolls back deployments if performance thresholds are breached.

Auto-Documentation:

Tools like Swagger AI auto-generate test reports and audit trails for compliance.

Accuracy Validation:

AI-generated scripts undergo an accuracy check before deployment.
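As a baseline for the flakiness forecasting described under Predictive Analytics, a simple heuristic can stand in for a trained model: count how often a test's outcome flips between consecutive runs. This is a swapped-in technique, not the ML model mentioned above, and the `flip_rate` and `likely_flaky` helpers, the threshold, and the sample histories are hypothetical.

```python
def flip_rate(history: list[bool]) -> float:
    """Fraction of consecutive run pairs whose outcome differs."""
    if len(history) < 2:
        return 0.0
    flips = sum(1 for prev, cur in zip(history, history[1:]) if prev != cur)
    return flips / (len(history) - 1)


def likely_flaky(history: list[bool], threshold: float = 0.3) -> bool:
    """Flag a test whose outcome flips at least `threshold` of the time."""
    return flip_rate(history) >= threshold


# Hypothetical pass/fail histories (True = pass), oldest run first.
stable = [True] * 10
flaky = [True, False, True, True, False, True, False, True, True, False]

print(likely_flaky(stable), likely_flaky(flaky))
```

A test that fails consistently has a low flip rate, which is the intended behaviour: it is broken, not flaky, and should still block the pipeline.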

By 2025, AI will automate 80% of test maintenance tasks, freeing QA engineers to focus on exploratory testing and strategic quality advocacy.

5. Implementing Gen-AI in Automation: A Step-by-Step Guide

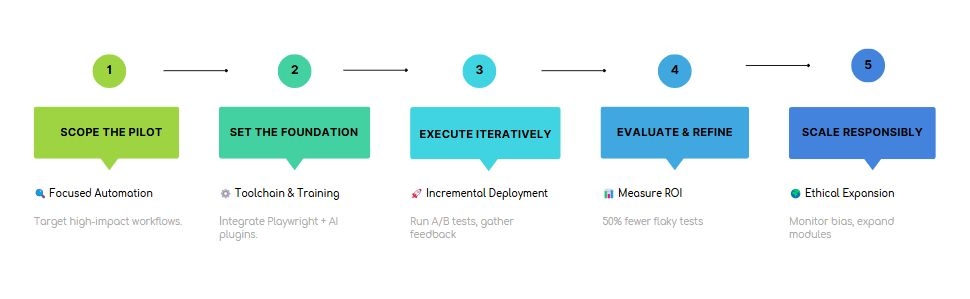

How do you move from pilot to production and scale AI-driven automation without breaking your pipeline? Let's get into it.

Step 1: Identify High-Impact Automation Opportunities

Prioritise use cases where AI adds maximum value:

Repetitive Tasks: Regression tests, smoke tests, and integration tests.

High-Churn Areas: Modules with frequent UI/API changes.

Step 2: Choose the Right Toolchain

Step 3: Build a Feedback-Driven Pipeline

- Collect Data: Test results and user behavior logs.

- Train Models: Use TensorFlow Extended (TFX) to refine AI logic.

- Validate & Adapt: Run A/B tests comparing AI vs. manual scripts.
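The A/B comparison in the last step could start as something as small as the sketch below, which averages a few metrics over runs of each suite against the same builds. The `SuiteRun` fields and the `summarise` helper are assumptions about what a team might track, and the numbers are placeholder inputs, not measured results.

```python
from dataclasses import dataclass


@dataclass
class SuiteRun:
    defects_found: int
    false_failures: int
    runtime_minutes: float


def summarise(runs: list[SuiteRun]) -> dict[str, float]:
    """Average each metric across a non-empty list of runs."""
    n = len(runs)
    return {
        "defects_per_run": sum(r.defects_found for r in runs) / n,
        "false_failures_per_run": sum(r.false_failures for r in runs) / n,
        "mean_runtime_minutes": sum(r.runtime_minutes for r in runs) / n,
    }


# Placeholder inputs for illustration only.
ai_runs = [SuiteRun(3, 1, 12.0), SuiteRun(2, 0, 10.0)]
manual_runs = [SuiteRun(2, 2, 20.0), SuiteRun(2, 1, 22.0)]

print("AI-generated:", summarise(ai_runs))
print("Manual:", summarise(manual_runs))
```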

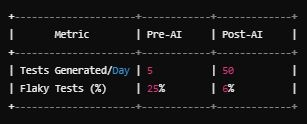

Step 4: Measure ROI with Automation-Specific KPIs

Step 5: Foster an AI-Ready QA Culture

Upskill Teams: Train engineers on prompt engineering.

Collaborate Early: Involve AI in sprint planning.

6. Challenges and Ethical Considerations

Bias in Training Data: Poorly curated datasets can lead to flawed test generation.

Over-Reliance on AI: Human oversight remains critical for complex scenarios.

Security: AI-generated tests must not expose sensitive data such as credentials or customer records.

Manual Review Requirement: QA engineers should manually review AI-generated test scenarios to ensure correctness and relevance.

Conclusion

Generative AI is not just another tool. It is a strategic enabler for DevOps teams drowning in automation debt. By embracing self-optimising test suites, organisations can shift from reactive testing to proactive quality assurance, where AI and humans collaborate to deliver flawless software at DevOps speed.