Introduction

Everything has a beginning. The convergence of DevOps practices with machine learning operations (MLOps) is now proving to be a game-changer for deploying scalable, reliable, and maintainable machine learning systems in production.

Just as DevOps transformed software development by promoting continuous integration and delivery, MLOps brings the same principles to the world of machine learning, helping teams streamline the end-to-end lifecycle of models—from data collection and training to deployment and monitoring.

Here is how MLOps and DevOps practices complement each other in machine learning production.

Understanding MLOps and DevOps

Machine learning (ML) is revolutionising industries, but getting ML models to run smoothly in production is no small feat. This is where MLOps comes into play. MLOps integrates machine learning into the DevOps pipeline, adapting its principles to address the unique needs of ML systems. The goal is to simplify taking ML models from development to production, ensuring they operate reliably at scale and are continually improved over time.

To understand MLOps, let’s first look at the key principles of DevOps, which has fundamentally transformed the software development lifecycle. DevOps promotes collaboration between development and operations teams, automates processes, and creates continuous feedback loops for faster, more reliable software releases. MLOps builds on these principles but introduces additional complexity due to the nature of machine learning. Unlike traditional software, where changes tend to move through a linear sequence of stages, ML models evolve iteratively and require their own testing, monitoring, and refinement strategies.

The connection between MLOps and DevOps lies in the shared principles of collaboration, automation, and continuous improvement. Practices like version control, CI/CD pipelines, and automated deployment are foundational in both frameworks.

Core Similarities and Differences

- Team Structure and Collaboration

Both DevOps and MLOps aim to break down silos and foster collaboration between teams. However, MLOps requires a more specialised team structure. In a typical DevOps environment, developers and operations engineers work closely to deploy software. MLOps also involves data scientists, who focus on machine learning models rather than just code. As a result, MLOps teams are more cross-functional, combining expertise from data science, engineering, and operations to develop, train, and deploy ML models effectively.

- Development Process: Iterative vs. Linear

The DevOps development pipeline is linear: new features or fixes move through well-defined stages. MLOps, however, follows an iterative approach. Machine learning models evolve continuously, undergoing regular retraining, testing, and refinement based on performance feedback and new data. This creates a circular pipeline in which models are constantly improved, reflecting the experimental nature of machine learning.

- Testing Requirements

While automated testing is key in both DevOps and MLOps, MLOps goes a step further to address the complexities of machine learning. In addition to validating code functionality, testing in MLOps involves evaluating model accuracy and prediction quality and detecting bias. Given the dynamic nature of ML models, this process also includes cross-validation and benchmarking against real-world scenarios to ensure the model generalises well and meets business objectives.
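
To make these checks concrete, here is a minimal sketch of an automated quality gate that a CI pipeline could run before promoting a model. It assumes binary predictions and uses one simple fairness measure, the gap in positive-prediction rates between groups (often called the demographic parity difference). The function names and the default thresholds (0.8 accuracy, 0.2 parity gap) are hypothetical choices for illustration, not recommendations.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Tuple


def accuracy(y_true: List[int], y_pred: List[int]) -> float:
    """Fraction of predictions that match the labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def demographic_parity_gap(y_pred: List[int], groups: List[Hashable]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    positives: Dict[Hashable, int] = defaultdict(int)
    totals: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def quality_gate(
    y_true: List[int],
    y_pred: List[int],
    groups: List[Hashable],
    min_accuracy: float = 0.8,    # hypothetical threshold
    max_parity_gap: float = 0.2,  # hypothetical threshold
) -> Tuple[bool, Dict[str, float]]:
    """Return (passed, report) for a candidate model's predictions on a test set."""
    report = {
        "accuracy": accuracy(y_true, y_pred),
        "parity_gap": demographic_parity_gap(y_pred, groups),
    }
    passed = report["accuracy"] >= min_accuracy and report["parity_gap"] <= max_parity_gap
    return passed, report
```

In a real pipeline, a failed gate would typically stop the deployment step, for example by exiting with a non-zero status code so the CI job is marked as failed.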

- Monitoring and Metrics

Monitoring in DevOps focuses on traditional software health indicators like uptime and server load. In MLOps, however, it’s also about model performance. Teams track prediction quality, model drift (when a model's performance degrades due to changes in data), and other metrics to ensure models remain effective over time. Ongoing monitoring also helps ensure that the model contributes to business KPIs, aligning technical performance with business value.
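
One common way to detect the shift in input data that leads to model drift is the Population Stability Index (PSI), which compares a feature's distribution at training time with its distribution in production. The sketch below is a plain-Python illustration using equal-width bins; the bin count and the small `eps` used to avoid taking the log of zero are assumptions, not fixed standards.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6
) -> float:
    """PSI between a baseline sample (e.g. training data) and a production sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # avoid zero-width bins when all values are equal

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # clamp the maximum into the last bin
            counts[idx] += 1
        # Floor empty bins at eps so log(a / e) stays finite.
        return [max(c / len(values), eps) for c in counts]

    exp_p = proportions(expected)
    act_p = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_p, act_p))
```

A widely used rule of thumb treats a PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift worth investigating, though teams usually tune these thresholds per feature and pair PSI with direct measures of prediction quality once labels arrive.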

The Business Case for MLOps with DevOps

The integration of DevOps principles into MLOps is a major driver in transforming ML projects into sustainable, production-grade services. Practices such as automation, CI/CD pipelines, and infrastructure as code (IaC) bring efficiency, standardisation, and reliability to ML workflows. For instance, automated testing ensures ML models are validated at every stage, reducing errors and improving consistency.

From a C-suite and business standpoint, integrating DevOps practices into MLOps streamlines the journey of ML models from conception to deployment. This approach ensures that models drive tangible value at scale.

DevOps practices address the operational challenges that often hinder machine learning production. For example, automation eliminates manual steps in model retraining, testing, and deployment. This shortens time-to-market, enhances user experience, improves the quality of predictions, and reduces risk.

CI/CD pipelines enable businesses to roll out new features or model updates quickly, ensuring agility in responding to market dynamics. Scalability becomes more achievable through infrastructure as code (IaC), which allows ML models to run in cloud-native, elastic environments optimised for cost and performance.

Key DevOps Practices Driving Scalable MLOps

- CI/CD - Continuous integration and continuous deployment ensure ML models are automatically tested, validated, and deployed, reducing manual errors and speeding up updates.

- Monitoring and logging - Continuous monitoring and logging track system health and model performance, such as prediction quality and drift, so teams can detect and respond to issues in production.

- Collaboration and version control - Cross-functional teams work together using version control systems, enabling simultaneous contributions to the same codebase while avoiding conflicts and boosting productivity.

- Infrastructure as code (IaC) - IaC automates the provisioning and management of infrastructure, enabling consistent, efficient, and scalable environments for ML workflows and deployments.

- Automation - Automation moves teams from manual model management to fully automated ML and CI/CD pipelines, allowing models to be retrained, validated, and redeployed without human intervention.
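
The automation and CI/CD practices above can be sketched as a retrain, validate, and promote loop. In this hypothetical example, `ThresholdModel` is a toy stand-in for a real model, and `run_pipeline` promotes the retrained candidate only if it clears a minimum accuracy and beats the current production model on the same holdout data (a champion/challenger comparison). The names and the 0.8 threshold are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Example = Tuple[float, int]  # (feature value, binary label)


@dataclass
class ThresholdModel:
    """Toy 1-D classifier standing in for a real model: predicts 1 when x >= threshold."""
    threshold: float

    def predict(self, x: float) -> int:
        return int(x >= self.threshold)


def evaluate(model: ThresholdModel, data: List[Example]) -> float:
    """Accuracy of the model on labelled examples."""
    return sum(model.predict(x) == y for x, y in data) / len(data)


def train(data: List[Example]) -> ThresholdModel:
    """'Retrain' by picking the observed value that maximises training accuracy."""
    candidates = sorted({x for x, _ in data})
    best = max(candidates, key=lambda t: evaluate(ThresholdModel(t), data))
    return ThresholdModel(best)


def run_pipeline(
    train_data: List[Example],
    holdout: List[Example],
    production: Optional[ThresholdModel],
    min_accuracy: float = 0.8,  # hypothetical promotion threshold
) -> Tuple[Optional[ThresholdModel], str]:
    """Retrain, validate, and promote with no manual step in between."""
    candidate = train(train_data)
    cand_score = evaluate(candidate, holdout)
    prod_score = evaluate(production, holdout) if production is not None else float("-inf")
    if cand_score >= min_accuracy and cand_score > prod_score:
        return candidate, "promoted"  # a real pipeline would deploy the candidate here
    return production, "rejected"     # keep serving the current model
```

Comparing against the production model, not just a fixed threshold, prevents an automated retrain from silently replacing a better model with a worse one.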

Essential MLOps Components

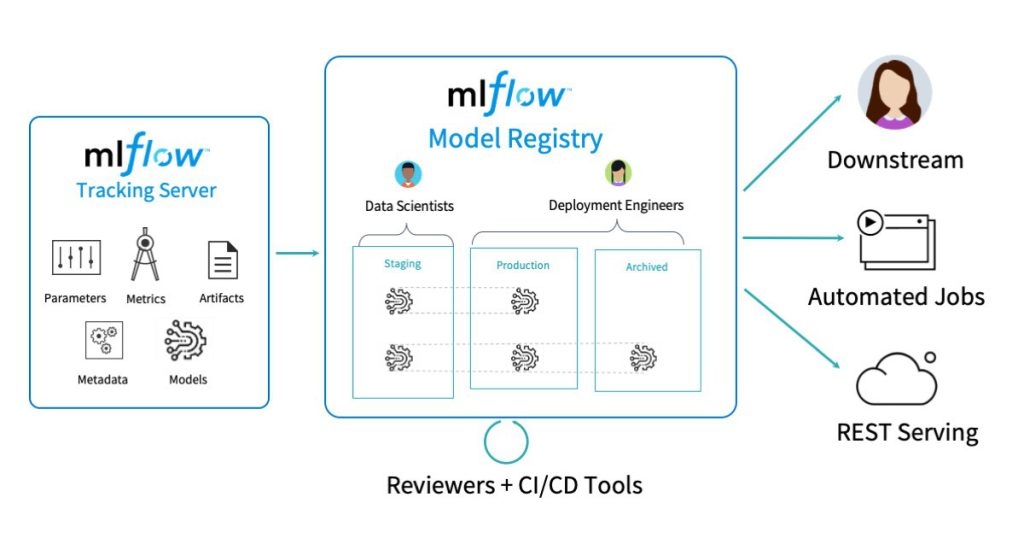

Model management in MLOps involves creating, training, and validating models using tools like MLflow and DVC for version control and Apache Airflow for automating data pipelines. These practices align with DevOps by ensuring reproducibility, collaboration, and automation for scalable ML systems.
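
Reproducibility in model management depends on tying every trained model back to the exact data, parameters, and code that produced it. The sketch below derives a deterministic run ID from a content hash of those inputs, similar in spirit to how DVC identifies data by its content hash. `fingerprint` and `RunRegistry` are hypothetical helpers, not the MLflow or DVC APIs, which provide far richer tracking.

```python
import hashlib
import json
from typing import Any, Dict


def fingerprint(data: bytes, params: Dict[str, Any], code_version: str) -> str:
    """Deterministic short ID derived from the training data, hyperparameters and code version."""
    h = hashlib.sha256()
    h.update(hashlib.sha256(data).hexdigest().encode())    # content hash of the dataset
    h.update(json.dumps(params, sort_keys=True).encode())  # key order does not change the ID
    h.update(code_version.encode())                        # e.g. a Git commit hash
    return h.hexdigest()[:12]


class RunRegistry:
    """In-memory stand-in for an experiment tracker or model registry."""

    def __init__(self) -> None:
        self._runs: Dict[str, Dict[str, Any]] = {}

    def log(self, data: bytes, params: Dict[str, Any], code_version: str,
            metrics: Dict[str, float]) -> str:
        run_id = fingerprint(data, params, code_version)
        self._runs[run_id] = {
            "params": dict(params),
            "code_version": code_version,
            "metrics": dict(metrics),
        }
        return run_id

    def get(self, run_id: str) -> Dict[str, Any]:
        return self._runs[run_id]
```

Because the ID depends only on the inputs, rerunning the same data, parameters, and code yields the same ID, while any change to the data produces a new one.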

Training and validation refine model performance with cloud platforms like AWS SageMaker and techniques like cross-validation. Integrating these into CI/CD pipelines ensures efficiency and consistency, mirroring DevOps practices to speed up model development and scaling.
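
Cross-validation estimates how well a model generalises by training on k-1 folds of the data and scoring on the remaining fold, rotating until every fold has been held out once. The plain-Python sketch below uses contiguous, unshuffled folds and caller-supplied `fit` and `score` functions, both assumptions for illustration; libraries such as scikit-learn provide this via `KFold`, including optional shuffling.

```python
from typing import Any, Callable, List, Sequence, Tuple


def k_fold_indices(n: int, k: int) -> List[Tuple[List[int], List[int]]]:
    """Split range(n) into k contiguous folds and return (train_idx, val_idx) pairs."""
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    splits, start = [], 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        splits.append((train, val))
        start += size
    return splits


def cross_validate(xs: Sequence[Any], ys: Sequence[float], k: int,
                   fit: Callable[[list, list], Any],
                   score: Callable[[Any, list, list], float]) -> List[float]:
    """Fit on each training split and return one validation score per fold."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return scores


# Example "model": a baseline that always predicts the mean of the training targets.
def fit_mean(xs: list, ys: list) -> float:
    return sum(ys) / len(ys)


def mse(model: float, xs: list, ys: list) -> float:
    return sum((model - y) ** 2 for y in ys) / len(ys)
```

The spread of the per-fold scores is as informative as their mean: a large spread suggests the model's performance depends heavily on which data it sees.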

Deployment and monitoring integrate models into production with automated pipelines and serving tools like TensorFlow Serving. Monitoring tools like Prometheus and drift detection ensure models stay relevant, while compliance measures secure data and meet regulations. These practices streamline MLOps, enhancing scalability, reliability, and continuous improvement.

Tools and Challenges for ML Leads When Integrating DevOps Practices with MLOps

Tools:

- MLflow

- Kubeflow

- Qdrant

- LangChain

- Comet ML

- Prefect

- Weights & Biases

- Metaflow

- Kedro

- Pachyderm

- Data Version Control (DVC)

- LakeFS

- Feast

- Featureform

Challenges:

Integrating DevOps with MLOps presents several challenges for ML leads. Data management is complex, as maintaining data quality and automating pipelines across the ML lifecycle can be difficult. Security is a major concern: platforms like Azure ML and BigML can be targeted through phishing or exposed API keys, which can lead to data or model exfiltration. Compliance is another challenge, requiring robust systems for data security and auditability to prevent unauthorised access.
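
Part of automating data quality is rejecting bad batches before they reach training. Below is a minimal sketch of a schema check, assuming each record arrives as a dictionary and the schema maps each column to its allowed type(s) and whether it may be null. `validate_rows` and the schema format are hypothetical; dedicated data-validation tools also cover value ranges, distributions, and freshness.

```python
from typing import Any, Dict, List, Tuple, Type, Union

# Hypothetical schema format: column -> (allowed type or tuple of types, nullable?)
Schema = Dict[str, Tuple[Union[Type, Tuple[Type, ...]], bool]]


def validate_rows(rows: List[Dict[str, Any]], schema: Schema) -> List[str]:
    """Return human-readable data-quality errors; an empty list means the batch passed."""
    errors = []
    for i, row in enumerate(rows):
        for column, (allowed_types, nullable) in schema.items():
            value = row.get(column)  # a missing key is treated the same as an explicit None
            if value is None:
                if not nullable:
                    errors.append(f"row {i}: '{column}' is missing")
            # Note: bool is a subclass of int in Python, so True/False pass an int check.
            elif not isinstance(value, allowed_types):
                errors.append(f"row {i}: '{column}' has unexpected type {type(value).__name__}")
    return errors
```

A pipeline step could run this check on each incoming batch and halt, or quarantine the batch, whenever the returned list is non-empty.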

Ensuring that ML models are fair and unbiased is crucial, especially in sensitive applications like hiring or lending. Model lifecycle management also presents difficulties, especially around tracking versions and preventing model drift, which can be exploited by attackers if not properly managed. Hiring professionals with expertise in both machine learning and DevOps is often a struggle, and bridging this gap requires cross-team collaboration.

With the vast number of available tools, ensuring seamless integration across the entire ML lifecycle is a challenge. Poor integration or lack of proper access controls can expose organisations to security risks, as shown by attacks against platforms like Google Cloud Vertex AI, where unauthorised access can lead to data or model theft.

Conclusion

To wrap it up, MLOps is a key driver in scaling machine learning in production. According to Gartner, MLOps is a subset of ModelOps, which focuses on the operationalisation of all types of AI models, while MLOps specifically addresses the operationalisation of ML models.

Applying DevOps principles to machine learning streamlines operations and automates processes. Once the pipeline is set up, routine tasks run automatically and the focus shifts to monitoring the models, which tools with an intuitive UI make more manageable and efficient. Although it may look like a new concept, MLOps essentially applies DevOps thinking to machine learning projects, allowing teams to deploy, enhance, and scale models.